Cover image taken from Unsplash

This post is part of the API performance improvement mini series 🚀

- Improve API performance with CDN

- Improve API performance with application caching (this post)

Common web caching

This overview was suggested by a friend in the comments.

The most common layers of web caching we have today:

- Client-side caching (PWAs, localStorage, etc.)

- Edge caching (CDN). The most powerful layer for caching static content close to the user's location.

- API or reverse proxy caching (AWS API Gateway, Varnish; Kong and Traefik have plugins too)

- Application caching (run your own shared Redis/Memcached)

- Per-instance caching (memory-based caching)

- Database caching (DynamoDB DAX, database query cache)

Background

In the previous post, we discussed how API performance can be improved with a CDN. That raises the question of when to choose a CDN and when to choose application caching.

What is application caching

Application caching is caching that happens at the application level (the API server), for example an in-memory cache or a cache database (normally a key-value store).

How it differs from a CDN, and when to use each

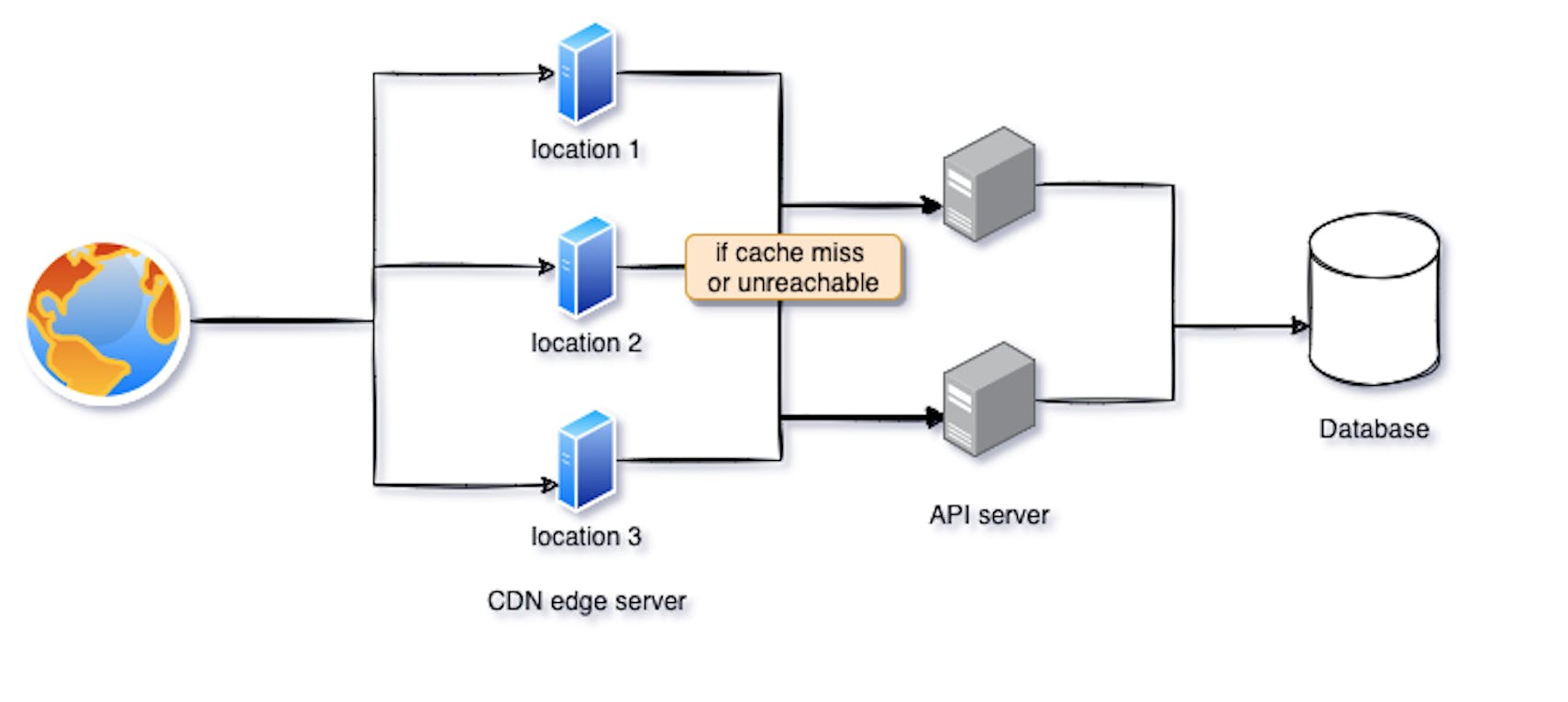

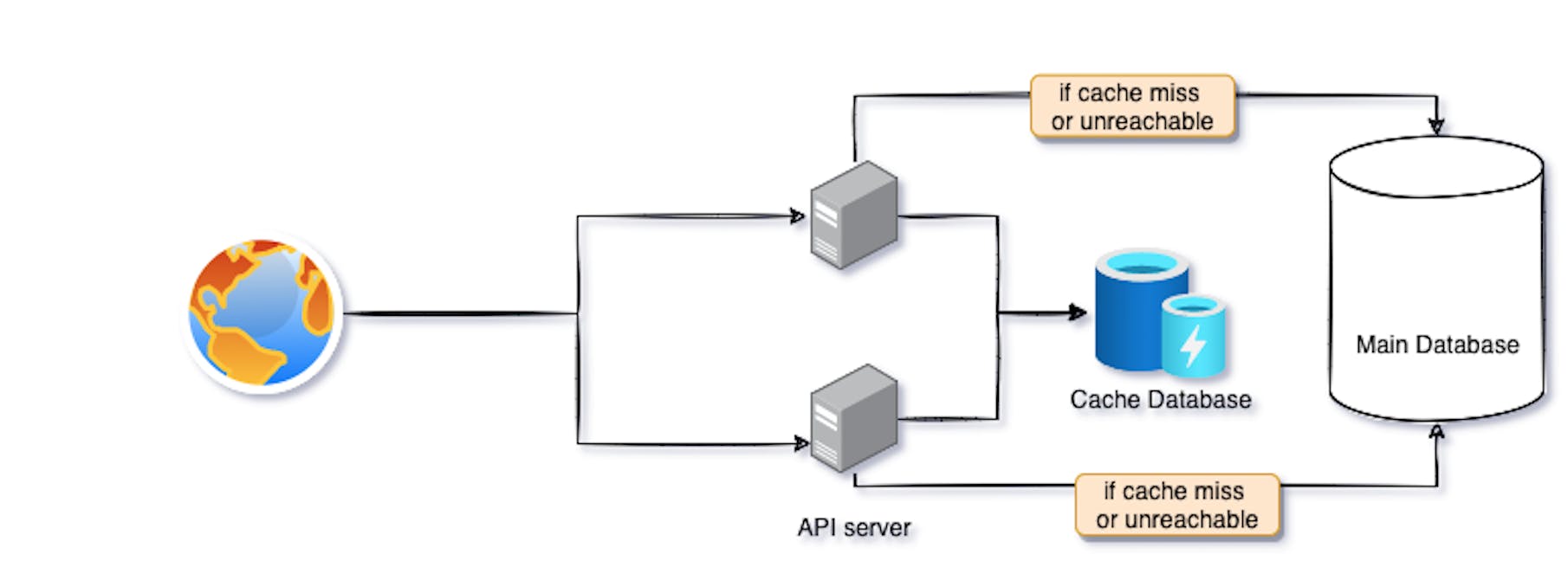

With a CDN, incoming requests never reach the API server when the cache is hit.

With application caching, incoming requests are always handled by the API server, but the server only queries the main database when the cache database has no record (a cache miss) or cannot be reached.
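The application-caching read path described above can be sketched in a few lines of Go. This is only an illustration: the `Cache` interface, `mapCache`, `fetch`, and the `loadFromDB` callback are hypothetical names, not taken from the post's source code.

```go
package main

import (
	"errors"
	"fmt"
)

// ErrMiss reports that the key is not in the cache (hypothetical sentinel error).
var ErrMiss = errors.New("cache miss")

// Cache is a hypothetical minimal interface over the cache store.
type Cache interface {
	Get(key string) (string, error)
	Set(key, value string) error
}

// mapCache is a toy in-process cache used only for this illustration.
type mapCache map[string]string

func (m mapCache) Get(key string) (string, error) {
	v, ok := m[key]
	if !ok {
		return "", ErrMiss
	}
	return v, nil
}

func (m mapCache) Set(key, value string) error {
	m[key] = value
	return nil
}

// fetch returns the value plus a status: "hit", "miss", or "unreachable".
// The main database is only queried when the cache cannot answer.
func fetch(c Cache, key string, loadFromDB func(string) (string, error)) (string, string, error) {
	v, err := c.Get(key)
	if err == nil {
		return v, "hit", nil
	}
	status := "miss"
	if !errors.Is(err, ErrMiss) {
		status = "unreachable" // the cache store itself failed
	}
	v, err = loadFromDB(key)
	if err != nil {
		return "", status, err
	}
	if status == "miss" {
		_ = c.Set(key, v) // best effort: a failed write only costs a future miss
	}
	return v, status, nil
}

func main() {
	dbCalls := 0
	load := func(key string) (string, error) {
		dbCalls++
		return "value-for-" + key, nil
	}
	c := mapCache{}
	for i := 0; i < 3; i++ {
		v, status, _ := fetch(c, "user:1", load)
		fmt.Println(v, status)
	}
	fmt.Println("database calls:", dbCalls)
}
```

Only the first call reaches the simulated database; the later calls are answered from the cache.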

Here is my opinion

Use CDN when:

- The data is available to the public (live feeds for sporting events, traffic, registration platforms, hot sales)

- The data is not privacy sensitive

Use application caching when:

- You need more control over the stored data (setting data, clearing data)

- The data is privacy sensitive

- The content is private (content for authenticated users)

Of course, we can use both if that fits the use case.

The closer a cache sits to the user, the more powerful (faster) it is, but the harder it is to control and invalidate. The closer it sits to the data source, the less network latency it saves, but the easier it is to control and invalidate.

How to select which API to cache

The code snippets below are written in Go with Fiber. Most details are omitted; only the code related to setting the cache is shown.

Source code is available at cncf-demo/cache-server

I use BadgerDB in memory-only mode for cache storage. It is an embeddable, persistent, and fast key-value (KV) database written in pure Go. I chose BadgerDB because the setup is frictionless: there is no separate database to set up, and it is still enough to demonstrate application caching. It is suitable for a single-server setup (a single instance or replica). If you run more than one server, consider Redis, Memcached, or other alternatives.

We can use Fiber's cache middleware for the caching. It supports memory caching as well as different storage drivers.

But it doesn't support customisation such as defining a specific time-to-live (TTL) for each API. I wrote a middleware to support this. It is too long to paste here, so check out the source code for the implementation.

Here is the flow of how the middleware can be written.

- Check whether the request asks to bypass the cache (it has a Bypass-Cache header or a refresh query param)

- Check whether it is a GET request

- Check whether the cache storage has a record

- Return the previous response if there is a record

- Create a record if there is none

- Add an S-Cache header to indicate the cache status, with the value miss, hit, or unreachable

With the middleware written, the usage is straightforward:

// cache for 5 seconds

app.Get("/server-cache", allowServerCache(5), func(c *fiber.Ctx) error {

	// simulate a database call that takes 200ms

	time.Sleep(200 * time.Millisecond)

	return c.JSON(fiber.Map{"result": "ok"})

})

Deploy the code to test

You can deploy it anywhere to test. The example here deploys to Kubernetes; optionally, you can run it on localhost.

cd examples/cache-server

kubectl apply -f cache-server.yaml

Verify the response headers with curl, including the cache status header that we set earlier:

curl IP_ADDRESS/server-cache -v

# only relevant values are shown here

< HTTP/1.1 200 OK

< Content-Type: application/json

< Content-Length: 15

< Cache-Control: no-store

< S-Cache: hit # cache status

<

{"result":"ok"}

Test the API performance

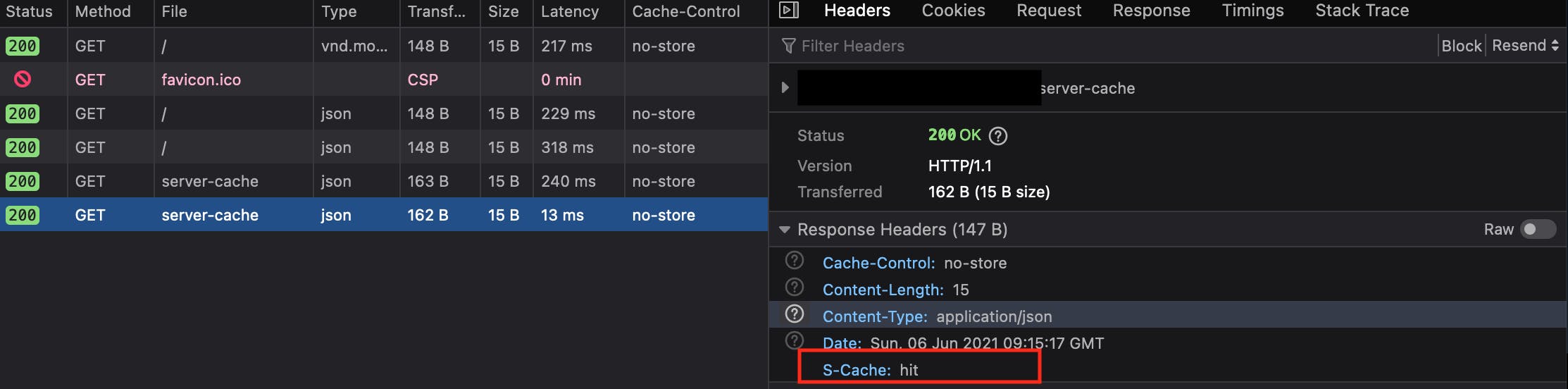

Visual testing

Open the browser at the public IP address and send requests to / and /server-cache, twice for each API.

/ has no cache; /server-cache is cached for 5 seconds.

Check the S-Cache response header for the cache status.

The results show that the non-cacheable API (/) responds in a different time on each request; the variation comes mainly from network latency, not from any performance improvement. Meanwhile, the cacheable API (/server-cache) improved from 240ms to 13ms, about 18X faster.

Benchmark test

Note that I am running the test from my local terminal, so variation in internet speed is not accounted for. The benchmark uses bombardier with the following parameters:

-c 200 -n 100000

-c Maximum number of concurrent connections

-n Number of requests

The API server runs on only one replica, with the following resources:

resources:

  requests:

    memory: "64Mi"

    cpu: "250m"

Here is the benchmark result for the non-cacheable API /: an average of 922 requests per second, 1m49s to finish, and an average latency of 218.86ms.

$ bombardier -c 200 -n 100000 IP_ADDRESS/

Bombarding IP_ADDRESS/ with 100000 request(s) using 200 connection(s)

100000 / 100000 [=====] 100.00% 911/s 1m49s

Done!

Statistics Avg Stdev Max

Reqs/sec 922.00 827.36 5866.92

Latency 218.86ms 9.84ms 369.95ms

HTTP codes:

1xx - 0, 2xx - 100000, 3xx - 0, 4xx - 0, 5xx - 0

others - 0

Throughput: 191.66KB/s

Here is the benchmark result for the cacheable API /server-cache: an average of 3507.45 requests per second, 28s to finish, and an average latency of 56.79ms.

$ bombardier -c 200 -n 100000 IP_ADDRESS/server-cache

Bombarding IP_ADDRESS/server-cache with 100000 request(s) using 200 connection(s)

100000 / 100000 [=====] 100.00% 3510/s 28s

Done!

Statistics Avg Stdev Max

Reqs/sec 3507.45 3205.75 16652.32

Latency 56.79ms 34.33ms 361.68ms

HTTP codes:

1xx - 0, 2xx - 100000, 3xx - 0, 4xx - 0, 5xx - 0

others - 0

Throughput: 828.42KB/s

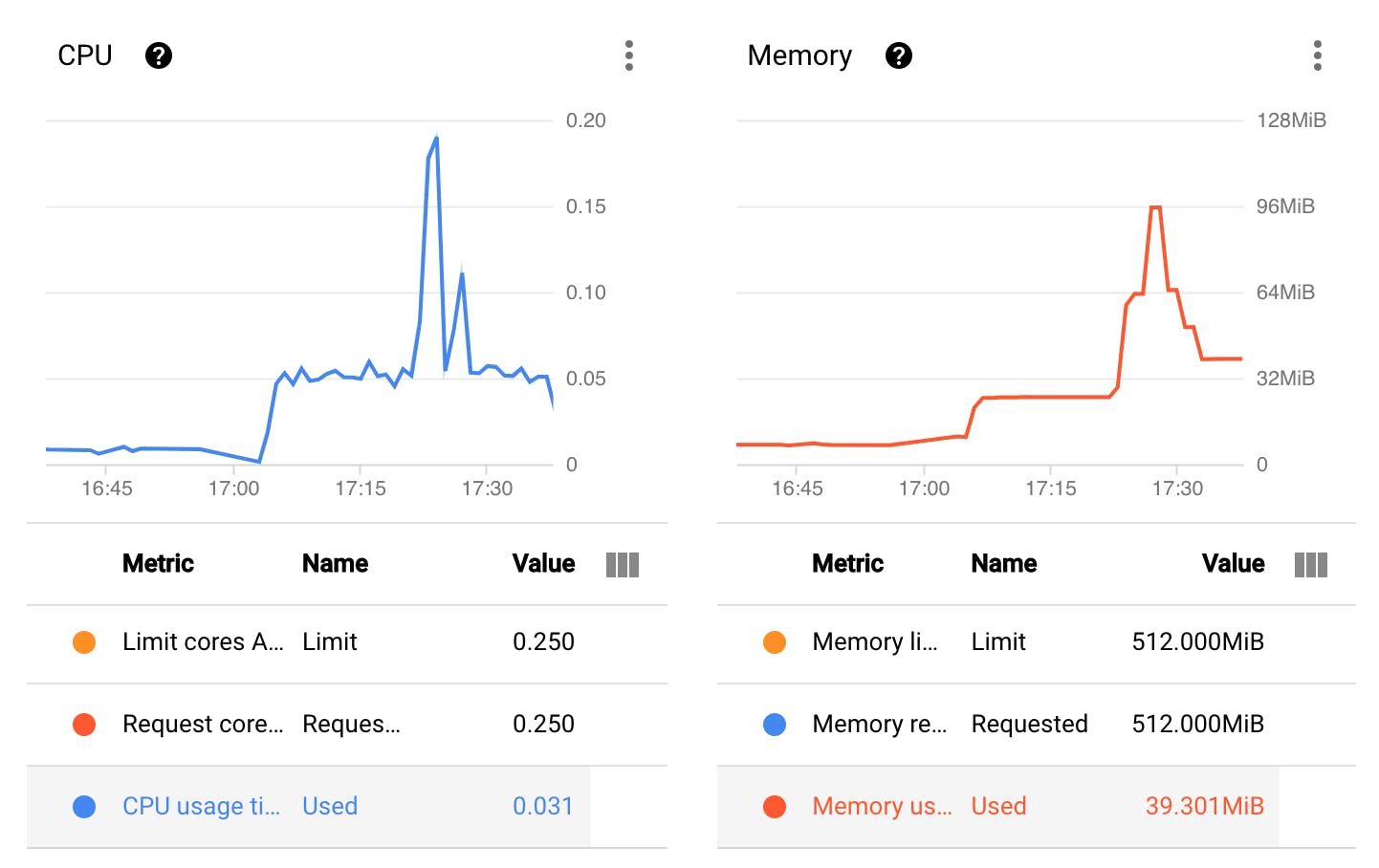

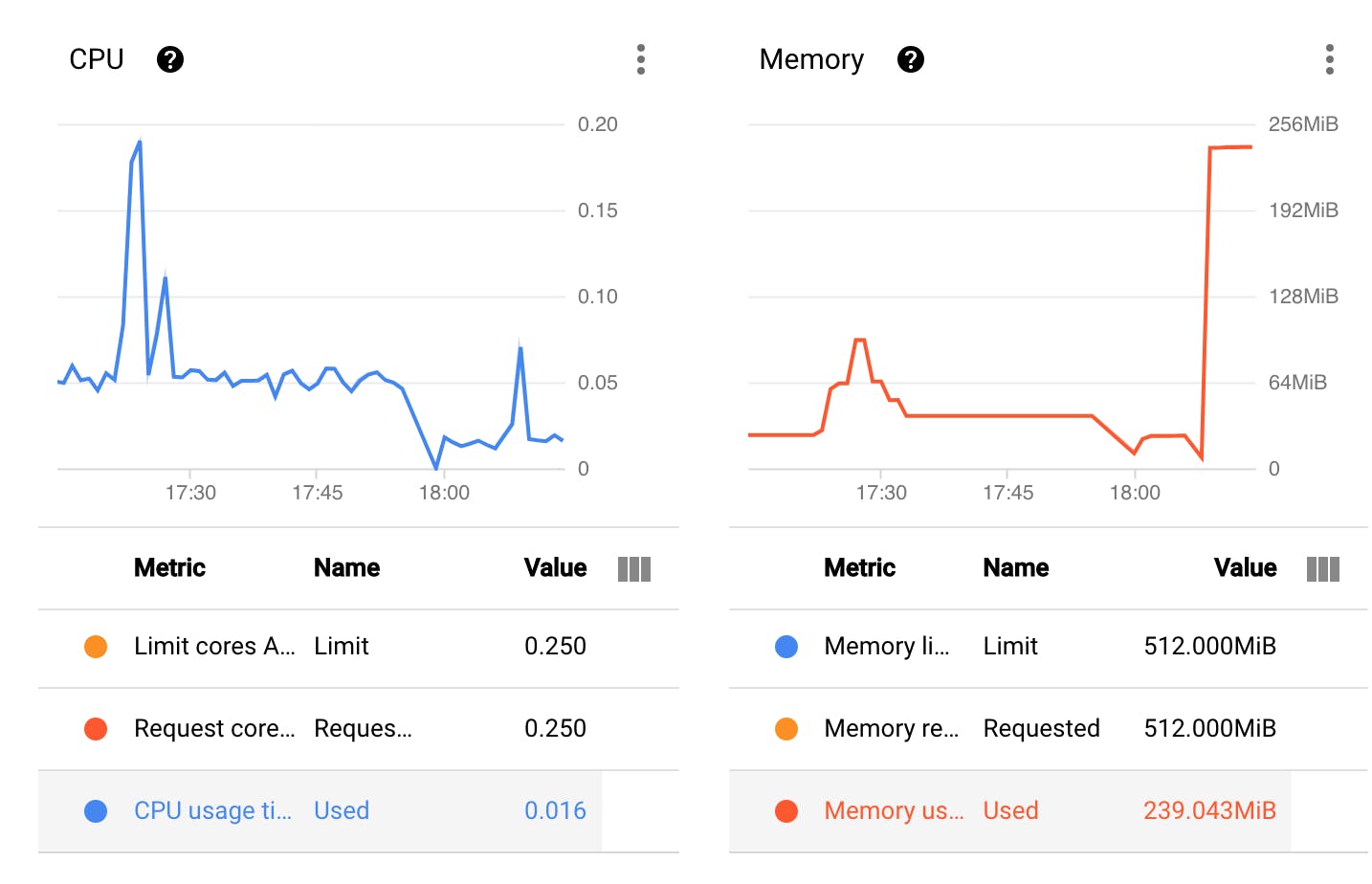

Here is the API server usage during the test

We get about a 4X improvement in both latency and RPS, even though the cache here is an embedded database that shares resources with the API server. A dedicated cache database server may improve this further.

What if we combine application caching and CDN, with the application cache set to 30s and the CDN cache set to 5s?

Here is the benchmark result for the cacheable API /server-and-cdn-cache: an average of 9731.17 requests per second, 10s to finish, and an average latency of 16.96ms.

$ bombardier -c 200 -n 100000 IP_ADDRESS/server-and-cdn-cache

Bombarding IP_ADDRESS/server-and-cdn-cache with 100000 request(s) using 200 connection(s)

100000 / 100000 [=====] 100.00% 9585/s 10s

Done!

Statistics Avg Stdev Max

Reqs/sec 9731.17 2626.33 17426.49

Latency 16.96ms 19.25ms 2.02s

HTTP codes:

1xx - 0, 2xx - 100000, 3xx - 0, 4xx - 0, 5xx - 0

others - 0

Throughput: 2.77MB/s

Here is the API server usage during the test

We get about a 3X improvement in latency and RPS compared with application caching alone, and about a 13X improvement in latency and an 11X improvement in RPS compared with the non-cached API.

| Type | Avg RPS | Avg Latency | Time taken |
| --- | --- | --- | --- |
| No cache | 922.00 | 218.86ms | 1m49s |
| Application cache | 3507.45 | 56.79ms | 28s |
| CDN + application cache | 9731.17 | 16.96ms | 10s |
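The improvement factors quoted in this post are simple ratios of the averages in the table. As a quick check, the small program below recomputes them; the `result` type and `speedup` function are names made up for this illustration.

```go
package main

import "fmt"

// result holds one row of the benchmark table.
type result struct {
	name      string
	rps       float64 // average requests per second
	latencyMs float64 // average latency in milliseconds
}

// speedup returns how many times better "after" is than "before":
// higher RPS is better, lower latency is better.
func speedup(before, after result) (rps, latency float64) {
	return after.rps / before.rps, before.latencyMs / after.latencyMs
}

func main() {
	noCache := result{"No cache", 922.00, 218.86}
	app := result{"Application cache", 3507.45, 56.79}
	both := result{"CDN + application cache", 9731.17, 16.96}

	pairs := [][2]result{{noCache, app}, {app, both}, {noCache, both}}
	for _, p := range pairs {
		rps, latency := speedup(p[0], p[1])
		fmt.Printf("%s -> %s: %.1fX RPS, %.1fX latency\n", p[0].name, p[1].name, rps, latency)
	}
}
```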

Bypass cache

As defined in the middleware, if a request has a Bypass-Cache header or a refresh query param, the cache is bypassed and S-Cache is set to unreachable.

Test bypassing the cache (with the refresh query param):

curl 'IP_ADDRESS/server-cache?refresh=1' -v

# only relevant values are shown here

< Content-Type: application/json

< Content-Length: 15

< Cache-Control: no-store

< S-Cache: unreachable # cache is bypassed

<

{"result":"ok"}

Test bypassing the cache (with the Bypass-Cache header):

curl -H 'Bypass-Cache: 1' 'IP_ADDRESS/server-cache' -v

# only relevant values are shown here

< Content-Type: application/json

< Content-Length: 15

< Cache-Control: no-store

< S-Cache: unreachable # cache is bypassed

<

{"result":"ok"}

Conclusion

Application caching is useful for improving API performance and reducing the workload on the main database. It can also be used together with a CDN. Unlike a CDN, it gives us full control over the data we store, but it comes with a higher price tag than a CDN (assuming you are not using an embeddable DB as in this post), because an extra database instance has to be provisioned.

Some people use the main DB as the cache DB as well to reduce the cost. There is no perfect solution, only the solution that suits the use case.

To recap: the closer a cache sits to the user, the more powerful (faster) it is, but the harder it is to control and invalidate. The closer it sits to the data source, the less network latency it saves, but the easier it is to control and invalidate.

Source code is available at cncf-demo/cache-server
